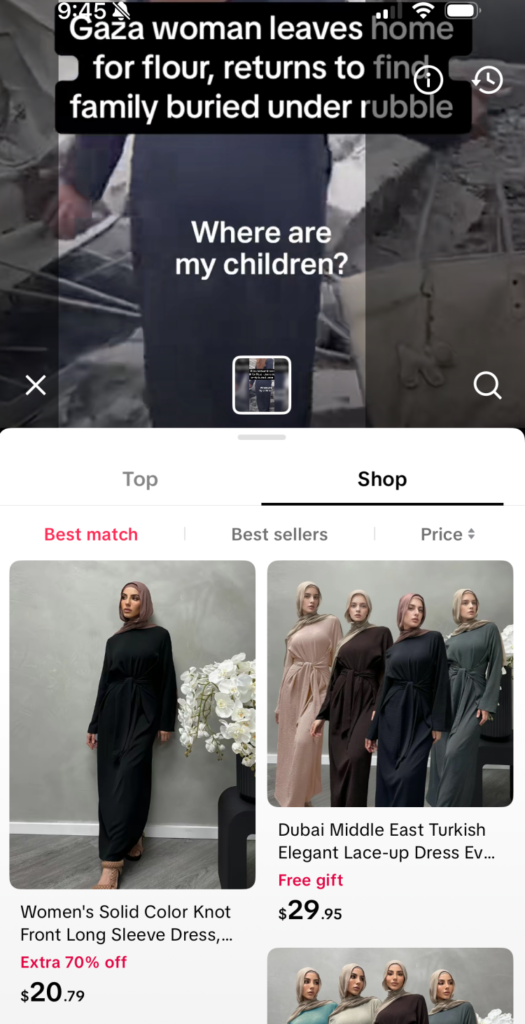

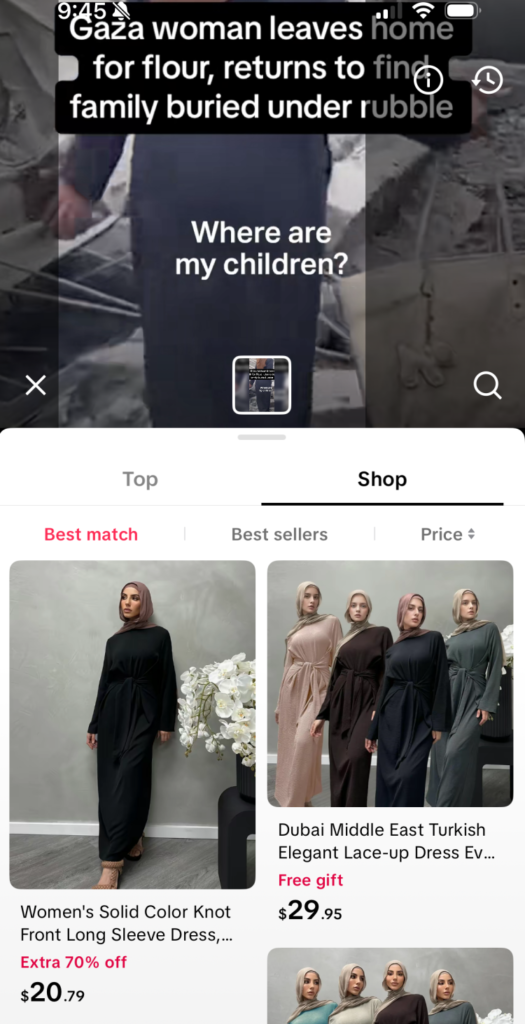

Imagine a scene of profound human loss. A woman in Gaza, her voice cracking with desperation, searches through the rubble of what was once her home. She cries out for her missing daughters, husband, and cousin. This raw footage, a stark document of real-world tragedy, appears on your TikTok feed.

Then, the platform prompts you to “Find Similar.”

This isn’t a hypothetical scenario. It’s a real feature TikTok is rolling out—an AI-powered visual search tool that scans any video and identifies objects to suggest similar products for sale on TikTok Shop. In the case of the Palestinian woman, the algorithm pinpointed her dress, headscarf, and handbag, offering users links to purchase “Elegant Lace-Up Dresses” and “Women’s Solid Color Knot Front” apparel.

As a digital ethics analyst, I see this as more than a technical misstep. It’s a critical moment that reveals the fundamental, and often unsettling, reality of today’s social media ecosystem: every piece of content, regardless of its intent or context, is being transformed into a potential point of sale.

Deconstructing the “Find Similar” Feature: Convenience at What Cost?

TikTok calls this new feature “visual search tags” and presents it as a logical extension of existing user behavior: people already screenshot items they like so they can search for them online, and the tool simply automates that process. The company even includes a disclaimer warning that the AI may make errors.

However, this framing misses the core issue. The problem isn’t just occasional inaccuracy; it’s the systematic inability to understand human context.

The AI doesn’t distinguish between a fashion haul video and footage of a humanitarian crisis. Its sole purpose is to identify shapes, colors, and patterns and convert them into commercial opportunities. The result is jarring and deeply insensitive, as when the same feature appeared on a video from Ms. Rachel, a beloved children’s educator who was advocating for children in Gaza. While she spoke of starvation and airstrikes, the algorithm highlighted her blue-and-white-striped dress for purchase.

The Two-Tiered Problem: Ethical Erosion and the “Endless Mall”

The implications of this feature extend far beyond a single insensitive product recommendation. The problem is twofold:

- The Context Blind Spot: The most immediate failure is ethical. Some content online should be off-limits to commercial exploitation. Grief, tragedy, activism, and personal moments of vulnerability are not shopping opportunities. By applying a commercial layer to every video, platforms like TikTok erode the digital spaces we reserve for empathy, information, and human connection. Users become unwitting billboards, their most difficult moments mined for sales potential.

- The Architectural Shift to an “Endless Mall”: On a broader scale, this feature pulls the curtain back completely. For years, platforms have blurred the line between community and commerce; now the pretense is gone. The “Find Similar” tool makes it explicit that your scroll exists not just to connect or entertain you, but to monetize your attention. The digital town square is being relentlessly redesigned into a frictionless, endless shopping mall where every interaction can be tracked and turned into revenue.

What This Means for Users and Content Creators: Taking Back Control

While the feature can feel inescapable, users and creators are not entirely powerless. Here’s what you can do:

- For Viewers: Stay critically aware of this commercial layer. Understand that the platform is constantly analyzing what you watch in order to sell to you. This awareness is the first step toward consuming content more mindfully.

- For Creators: TikTok allows you to disable the “Find Similar” feature for your own videos. In your account settings, under Privacy > Suggest your content to others, you can turn off “Display related items.” This is a crucial step for creators who discuss sensitive topics or simply do not wish their content to be used for product discovery.

- For All of Us: This serves as a pressing reminder to question the architecture of the platforms we use daily. Who does this feature truly serve? Does it add genuine value, or does it simply extract it?

The Uncomfortable Honesty of a Hyper-Commercialized Web

In a strange way, TikTok’s “Find Similar” feature is brutally honest. It doesn’t hide its ambitions. It confirms what critics have long argued: that our online experiences are being meticulously engineered around transactions.

The challenge now is whether users will accept this “endless mall” as the inevitable future of the internet, or whether we will begin to demand digital spaces that respect context, honor human emotion, and value connection over commerce. The choice, for now, is still ours to make.